The AI Discovery That Wasn’t: A Cautionary Tale of Hype and Integrity

It was as if the author of the paper on AI had meticulously crafted the exterior of a luxurious Rolls-Royce, only for it to be discovered that under the hood it was powered by a Hot Wheels motor.

Artificial Intelligence (AI) has been the hottest subject in town, at least since ChatGPT went viral in November 2022.[1] Every other person is using some type of AI (e.g., generative AI) or writing about it, while major corporations are over-hyping it to sell new products that most people cannot even wrap their heads around. Alas, just like the internet, AI is here to stay and has demonstrated the potential to continuously change how we do things.

Earlier this year, while I was doing research for my previous job, I came across an academic paper that really caught my attention. This promising paper, published as a preprint[2] on arXiv and submitted to The Quarterly Journal of Economics, had received widespread attention, including coverage from the Wall Street Journal and The Atlantic, thanks to its groundbreaking claims about AI boosting scientific discovery. However, it turned out that this paper, written by a former second-year MIT PhD student in Economics and allegedly championed by a Nobel laureate, was eventually taken down by MIT. The paper, titled Artificial Intelligence, Scientific Discovery and Product Innovation, can still be downloaded from ResearchGate.

The paper claims that AI-assisted scientists in a materials science lab were able to discover new materials, which resulted in new patents and more product innovation. While scientists became more productive, the results also indicated that they felt less satisfied with their work due to skill underutilization and decreased creativity.

I am an economist and have no training in materials science. To me, the paper was ambitious and, for the most part, well written; the empirical strategy seemed robust and the findings were very interesting and relevant. So, what happened? I needed to find out what went wrong.

In this Substack post, I delve into the rise and fall of a widely discussed preprint on AI's impact on scientific discovery. The first section discusses the relevance of the paper and outlines its main claims. The second explains why those findings, if true and valid, would have been highly significant in the field of Economics. The third section explains the methodological aspects that proved most notable. The fourth section discusses the controversy that led to MIT’s unprecedented withdrawal of endorsement. Lastly, the fifth section offers a reflection on AI adoption research, informed by my thoughts while preparing this article.

1. The AI Hype: Groundbreaking Claims

This paper, published as a preprint in late 2024, had three key forces in its favor:

The title was catchy: it connects AI with scientific research and innovation.

It truly was innovative work: according to the author, this was the first paper providing causal evidence of AI’s impact on real-world R&D.

It was housed in a top-notch Economics department: a footnote on the first page of the paper acknowledges the guidance and support from Daron Acemoglu and David Autor, two of the world’s most renowned economists from MIT.

The paper made big claims about the transformative potential of AI in scientific research. It “demonstrated” that the integration of an AI tool within a materials science laboratory led to a substantial increase in scientific discovery and product innovation. Intuitively, the results made sense: equip researchers and scientists with AI tools and they will become more productive and make discoveries. According to the paper’s abstract:

AI-assisted researchers discovered 44% more materials, resulting in a 39% increase in patent filings and a 17% rise in downstream product innovation. These compounds possess more novel chemical structures and lead to more radical inventions. However, the technology has strikingly disparate effects across the productivity distribution: while the bottom third of scientists see little benefit, the output of top researchers nearly doubles.

The paper also shows that AI affects how scientists allocate their time across tasks. Results suggest that the AI tool enabled scientists to spend more time on judgment and experimentation tasks, and less time on idea generation.[3] Moreover, the paper showed that scientists experienced a reduction in satisfaction with the content of their work, likely due to skill underutilization and reduced creativity.

2. Economic Implications: Why These Findings Mattered

First and foremost, this would have been among the first rigorous studies to confirm the positive effects of AI adoption on product innovation, and, consequently, on productivity. While the link between innovation and productivity has long been studied,[4] AI remains a nascent field of research in Economics. This paper would have been highly regarded in the academic literature and would have enriched the policy dialogue around the use of AI.

Economists emphasize productivity because it is the main driver of economic growth over the long term. In layman’s terms, productivity means doing more with less. Paul Krugman, a prominent American economist, noted that “a country’s ability to improve its standard of living over time depends almost entirely on its ability to raise its output per worker.” Many economists agree that the only way countries can raise their standard of living sustainably is by producing more with existing or fewer resources,[5] that is, by being more productive. In fact, a World Bank report notes that productivity accounts for half of the differences in per capita GDP across countries. When fostered ethically and adequately, productivity is good.
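To make “output per worker” concrete, it can be written as a simple ratio. The notation below (Y for total output, L for the number of workers, t for the period) is a standard textbook convention used here only for illustration; it does not come from the paper.

```latex
y_t = \frac{Y_t}{L_t},
\qquad
\frac{\Delta y_t}{y_{t-1}} \approx \frac{\Delta Y_t}{Y_{t-1}} - \frac{\Delta L_t}{L_{t-1}}
```

For example, if output grows by 3% while the workforce grows by 1%, output per worker rises by roughly 2% (exactly 1.03 / 1.01 - 1, or about 1.98%). Producing the same output with fewer workers raises output per worker in the same way: that is “doing more with less.”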

Countries and firms cannot just buy productivity, but it can be induced by investing in R&D, fostering innovation or by targeted policies, among other actions. I will not get into the details behind the argument that productivity drives economic growth, but instead I want to focus on a key driver of productivity: innovation.

Innovation in technology, production processes and product variety can fuel sustained productivity growth.[6] These issues are at the core of the paper. The claims that AI-assisted research resulted in more materials discovery, patent filings and downstream product innovation suggest a significant boost to productivity. Such improvements could also have positive spillovers in sectors like healthcare and manufacturing. These claims in favor of AI would have strongly supported forecasts predicting that AI will accelerate global economic growth in the coming years.[7]

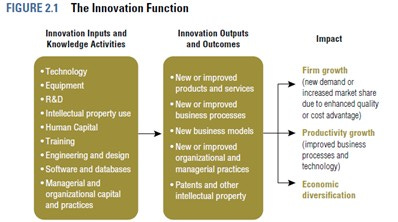

The following graphic breaks down innovation into inputs and outputs, and illustrates the relationship between innovation and productivity growth.

The finding that the impact of AI depends on scientists’ judgment would have been a remarkable insight.[8] Again, intuitively this makes sense: a more knowledgeable scientist could have a more fruitful back-and-forth with AI as they could ask better-informed and more nuanced questions. This finding emphasizes that AI is not a standalone solution, but rather a powerful tool whose effectiveness is amplified by human expertise. Further, if the quality of AI output is tied to the knowledge and skill of the human collaborators, then continued investment in human capital becomes necessary to maximize gains from AI.

The economic promise of the paper's claims was considerable. Had its findings been real and validated, they would have provided compelling empirical evidence for the transformative power of AI in driving innovation and, consequently, productivity, long-term economic growth and improved living standards. This would not only have advanced the nascent field of AI economics research but also provided guidance for policymakers seeking to harness AI's potential to foster productivity.

3. Apparent Methodological Rigor: Randomization in a Real-World Setting

The paper’s empirical strategy seemed broadly robust. The author uses data drawn from a large number of scientists over time, in what appears to be a randomized controlled experiment in an R&D lab. This next part is a bit technical, but the main points to consider are in bold.

**The sample size is large.** The analysis used data from 1,018 scientists (which is a lot of scientists!) in the R&D lab of a large U.S. firm, covering the period between May 2020 and June 2024, including pre-treatment and post-treatment periods.[9] This real-world setting provides an excellent basis for an empirical analysis. In econometrics, a large sample size is good because it enhances the statistical power of the models and, in some cases, the generalizability of the findings (i.e., whether the findings from this sample can be applied to a broader population).
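To illustrate why a larger sample adds statistical power, here is a minimal Python sketch of the minimum detectable effect for a simple two-group comparison of means. It assumes a normal approximation, equal outcome variance in both groups, a 5% significance level, 80% power and, hypothetically, that the 1,018 scientists are split evenly into two groups. The paper's actual design, which followed scientists over several years, is more complex, so treat this only as an intuition pump.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(n_treated: int, n_control: int,
                              alpha: float = 0.05, power: float = 0.80,
                              sd: float = 1.0) -> float:
    """Smallest true difference in means that a two-sided test at level alpha
    would detect with the given power (normal approximation, same outcome
    standard deviation `sd` in both groups)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    standard_error = sd * sqrt(1 / n_treated + 1 / n_control)
    return (z_alpha + z_power) * standard_error


# Hypothetical comparison: a small lab versus 1,018 scientists split evenly.
for n in (100, 1018):
    mde = minimum_detectable_effect(n // 2, n - n // 2)
    print(f"n = {n:>5}: minimum detectable effect ~ {mde:.2f} standard deviations")
```

Under these assumptions, the sketch reports a minimum detectable effect of roughly 0.56 standard deviations with 100 scientists and roughly 0.18 with 1,018: the larger sample can reliably pick up an effect about three times smaller.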

**The AI tool used by the scientists was randomly assigned to them.** This way, the author was able to group scientists according to whether or not they received the AI tool. Because assignment was random, both groups are, on average, similar in terms of observable (e.g., age, education degree) and unobservable (e.g., curiosity to try AI) characteristics. Randomization is good because it supports statistical inference by minimizing confounding variables and reducing selection bias. In other words, the only systematic difference between scientists in the two groups is the use of the AI tool.
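The logic of random assignment can be sketched in a few lines of Python. The data below are simulated and hypothetical (years of experience drawn uniformly between 1 and 30 for 1,018 scientists), and the even 50/50 split is an assumption for illustration, not the lab's actual procedure.

```python
import random
from statistics import mean


def randomly_assign(ids, seed=42):
    """Shuffle the IDs and split them in half: first half treated, second half control."""
    rng = random.Random(seed)
    shuffled = list(ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return set(shuffled[:half]), set(shuffled[half:])


# Simulated (hypothetical) data: years of experience for 1,018 scientists.
data_rng = random.Random(0)
experience = {i: data_rng.uniform(1, 30) for i in range(1018)}

# Assignment ignores experience entirely; it depends only on the shuffle.
treated, control = randomly_assign(experience)
gap = mean(experience[i] for i in treated) - mean(experience[i] for i in control)
print(f"treated: {len(treated)}, control: {len(control)}, "
      f"difference in mean experience: {gap:.2f} years")
```

Because the split ignores every characteristic of the scientists, the two groups end up with similar average experience, and the same holds in expectation for traits nobody measured, such as curiosity about AI. Any single draw still leaves a small gap, which is why inference relies on standard errors rather than on exact equality between groups.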

**Professional presentation.** As with most highly cited papers in Economics, the author uses graphs to illustrate the effect of AI on outcomes of interest and regression outputs to quantify the impact of AI, and is explicit about the use of fixed effects and robust standard errors. The paper includes a section on heterogeneity in which the author addresses how the impact of AI varies according to scientists’ levels of productivity and skill. The author is also candid about caveats to the paper’s results.
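For readers unfamiliar with the jargon, a generic two-way fixed-effects regression, plus a heterogeneity version by productivity tercile, looks roughly like the equations below. The notation is illustrative only; it is not necessarily the exact specification estimated in the paper.

```latex
Y_{it} = \alpha_i + \gamma_t + \beta \, \mathrm{AI}_{it} + \varepsilon_{it}

Y_{it} = \alpha_i + \gamma_t + \sum_{q=1}^{3} \beta_q \, \mathrm{AI}_{it} \, \mathbf{1}[Q_i = q] + \varepsilon_{it}
```

Here Y_it is an outcome (say, materials discovered) for scientist i in period t; α_i is a scientist fixed effect that absorbs stable differences between scientists; γ_t is a period fixed effect that absorbs shocks common to everyone; AI_it equals 1 once scientist i has the tool and 0 otherwise; and Q_i is the scientist's pre-treatment productivity tercile. Robust standard errors allow the error term ε_it to have unequal variance across observations. In this notation, the abstract's claim that the bottom third sees little benefit while top researchers nearly double their output corresponds to β_1 close to zero and a large β_3.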

**Comprehensive approach to AI.** The inclusion of both quantitative metrics (such as the number of materials discovered and patent filings) and qualitative measures (like time allocation and job satisfaction) indicated a comprehensive approach to analyzing the impact of AI. This dual perspective acknowledges the multifaceted impact of AI, moving beyond efficiency gains to consider the broader human and organizational consequences.

With the main claims, the economic implications and the methodological strengths of the paper laid out, it becomes clear why economists were enthusiastic about it. So, what happened?

4. The Unraveling: Why MIT Withdrew Its Endorsement

Given the hype created by both AI and the study, it was only a matter of time before the paper reached a non-economist audience.

Although this paper was written under the umbrella of MIT’s Economics department, the data used comes from an R&D lab that specializes in materials science. This field blends insights from physics, chemistry and engineering to create novel substances and incorporate them into products. We know that the paper was well received by economists, but what about scientists who know materials science?

Multiple sources (see here, here and here to cite a few) reported that things started going south in January 2025, when an unnamed computer scientist with experience in materials science approached MIT professors with questions about the AI tool used in the lab and its actual impact on the reported innovations. With those questions left unanswered, MIT released a statement noting that it had “conducted an internal, confidential review and concluded that the paper should be withdrawn from public discourse.” The release also included a statement from the professors who initially backed the paper, which said the following:

Over time, we had concerns about the validity of this research, which we brought to the attention of the appropriate office at MIT. In early February, MIT followed its written policy and conducted an internal, confidential review. While student privacy laws and MIT policy prohibit the disclosure of the outcome of this review, we want to be clear that we have no confidence in the provenance, reliability or validity of the data and in the veracity of the research […] Ensuring an accurate research record is important to MIT. We therefore would like to set the record straight and share our view that at this point the findings reported in this paper should not be relied on in academic or public discussions of these topics.

The main issue was the data. Or at least that is the main issue that was made public. Author Aidan Toner-Rodgers, who is no longer at MIT, wrote a very ambitious empirical study using suspicious data. Another Substack article, which very nicely dissects the paper, suggests that the data was entirely fabricated by the author. This might be right. All findings were spotless and unambiguous. Maybe too good to be true? It is indeed very strange that the company did not publish the findings itself or have a high-level scientist appear as a co-author of this paper.

While this is not a standard rule across the board, a good number of papers in Economics include download links to the underlying datasets and the Stata/R code used. Given how groundbreaking this study appeared to be at face value, it is strange that these supplements were not made available. However, it could be the case that the data and code were downloadable while the paper was live on arXiv and I just missed them.

5. Food for Thought

Using a car analogy, it was as if Toner-Rodgers had meticulously crafted the exterior of a luxurious Rolls-Royce, with polished chrome and a plush interior, only for it to be discovered that under the hood it was powered by a Hot Wheels motor.

It is very unfortunate that this paper was more hype than anything else. I genuinely believe in the power of AI to do good things for humanity and our planet.

Mistakes happen in research papers. Authors acknowledge them and issue corrections. But this is a much more serious issue: MIT basically shut down the paper, questioned the integrity of the data and (very likely) expelled the PhD student. While it is true that the paper had yet to be peer-reviewed, it received wide coverage and support from some of the most renowned economists. Did the economics discourse get carried away by the AI hype? I would not be surprised if it did. Was the paper endorsed too early? Definitely. What would have happened if this paper had been housed at a less renowned academic institution? I am not sure. In any case, the significant attention it received will hopefully prompt higher ethical standards for future research in the field.

The rapid acceptance of the paper, despite its preprint status, reveals a growing appetite for positive AI narratives. This case embodies the challenges of upholding integrity in an environment characterized by pressure for novel discoveries and widespread public excitement for emerging technologies.

The debacle of this empirical study underscores the serious ethical responsibility of researchers and reviewers. It also highlights the need for sound ethical training, strong institutional oversight and a culture that prioritizes integrity over sensationalism. The future of AI, including the perceptions we form and the decisions we make about it, demands an even higher standard of ethical conduct and verification.

In the meantime, research that evaluates the adoption of AI in the natural and social sciences would benefit tremendously from multidisciplinary reviews.

[2] A preprint is a draft of an academic article shared online before it undergoes the formal peer review process.

[3] Specifically, before the AI intervention, scientists reported spending 36% of their time on idea generation, 26% on judgment and 42% on experimentation. After the introduction of AI, these shares became 13%, 35% and 47%, respectively.

[4] For example, the Innovation Paradox report by the World Bank compiles a wide range of literature on innovation, productivity and economic growth.

[5] International Monetary Fund. (2024). Finance & Development: A Quarterly Publication of the International Monetary Fund, Volume 61, Number 3. https://www.imf.org/en/Publications/fandd/issues/2024/09

[6] Zymek, R. (2024). Back to Basics: Total Factor Productivity. https://www.imf.org/en/Publications/fandd/issues/2024/09/back-to-basics-total-factor-productivity-robert-zymek

[7] For example, see this report published by the EU, in particular the section “Economic potential of AI.”

[8] Specifically, the paper claimed that “scientists with strong judgment learn to prioritize promising AI suggestions, while others waste significant resources testing false positives.”

[9] In this case, treatment refers to the adoption of the AI tool.